Assessment at Chandler-Gilbert Community College: Overview


What is assessment?

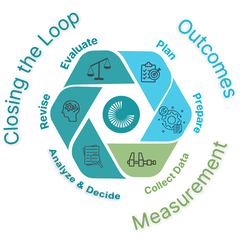

First and foremost, assessment is done to improve student learning. Assessment is the systematic, ongoing process of establishing clear, measurable outcomes of student learning; collecting, analyzing, interpreting, and using information to understand and improve teaching and learning; and ensuring that students have sufficient opportunities to achieve those outcomes. Assessments are guided by outcomes and indicators that have been selected by the institution, district, division, or discipline. Instruments are used to produce objective data based on the selected outcomes and indicators. The data are analyzed to determine areas of strength, weakness, concern, excellence, or any other desired criteria.

What does assessment at Chandler-Gilbert look like?


Culture of Assessment

At Chandler-Gilbert Community College, we are committed to a culture of assessment in which we seek continuous improvement to support student success.

Assessment is focused on a concept called “Continuous Improvement.” This means that assessments are continuously conducted and revised based on the data collected. Changes are usually minor from year to year, with larger changes occurring over multiple assessment cycles. Expect some revision during each assessment cycle, but the change should be incremental and manageable.

Steps Toward Creating a "Culture of Assessment" at an Institution*

Where is your unit in developing a culture of assessment?

*Adapted from Allen, M. J. (2004). Assessing academic programs in higher education (p. 7). Bolton, MA: Anker Publishing Company.

Chandler-Gilbert Community College will not use assessment results punitively or as a means of determining faculty or staff standing, position, or staffing or hiring preference. The purpose of assessment is to measure student learning, not to evaluate faculty or staff.

Chandler-Gilbert Community College will not use assessment in a way that will impinge upon the academic freedom or professional rights of faculty. Individual faculty members must continue to exercise their best professional judgment in matters of pedagogy, curriculum, and assessment.

Chandler-Gilbert Community College will not assume that assessment can answer all questions about all students.

Chandler-Gilbert Community College will not use assessment merely to be accountable to external entities.

Assessment must involve ongoing observation of what we believe is important for learning.

The major purpose of Program Review is to ensure high-quality educational programs. Program Review is a process that evaluates the status, effectiveness, and progress of programs and helps identify the future direction, needs, and priorities of those programs. It should integrate assessment of student learning into plans to promote positive and forward-looking change in programs.

Program Review is closely connected to strategic planning, resource allocation, and other decision-making at the program, division, and college level. The Program Review process should focus on improvements that can be made using resources currently available to the program. Where proposed program improvements or expansions would require additional resources, the need for and priority of those resources should be clearly specified.

Program Reviews should be completed every 5 years, unless a different schedule is dictated by an outside accreditation body.

Please visit the Planning and Research website to access the planning templates and other documents required for Program Review.

Canvas

The Canvas LMS (Learning Management System) is the chosen tool for quantitative assessment at CGCC. Check out the Assessment Guides or contact the CTLA to learn more about using this tool for assessment. All SLO assessment data are collected via Canvas.

Rubrics

Rubrics are the preferred way to collect assessment data at CGCC. A rubric that clearly aligns to the chosen outcome and indicators enables a consistent set of parameters to be applied across the entire student population. These rubrics can produce objective student data that will be used during assessment. You may create your own or use one of the SLO templates available from the SLOAC Canvas Course.

| Alignment | The degree to which student experiences, assignment(s) and instrumentation (rubrics) align to the Student Learning Outcome(s). |

| Analytic Scoring | Scoring that divides the student work into elemental, logical parts or basic principles. Scorers evaluate student work across multiple dimensions of performance rather than from an overall impression (holistic scoring). In analytic scoring, individual scores for each dimension are determined and reported; however, an overall impression of quality may be included. (P.A. Gantt; CRESST Glossary) See also: Holistic Scoring. Example: analytic scoring of a history essay might include scores of the following dimensions: use of prior knowledge, application of principles, use of original source material to support a point of view, and composition. |

| Anchors | A sample of student work that exemplifies a specific level of performance. Raters use anchors to score student work, usually comparing the student performance to the anchor. Example: if student work was being scored on a scale of 1-5, there would typically be anchors (previously scored student work) exemplifying each point on the scale. (CRESST Glossary) |

| Application | The minimum level of design requirement to attain the outcome of SLO assessment. Application requires students to take what they know and can do (knowledge and skills) and apply that to a circumstance set up in the assignment. This creates the condition of transfer, a necessary condition for valid conclusions of student performances. |

| Artifacts | Documents or assignments that provide the raw data for assessment. |

| Assessment | "Assessment is the systematic collection, review, and use of information about educational programs undertaken for the purpose of improving student learning and development." (Palomba & Banta, 1999) |

| Assessment for Accountability | The assessment of some unit (could be a program, department, or entire institution) conducted to satisfy external stakeholders. Results are summative and often compared across units. (Leskes, A., 2002). Example: to retain state approval, graduates of the school of education must achieve a 90 percent pass rate or better on teacher certification tests. |

| Assessment for Improvement | Assessment that feeds directly back into revising the course, program, or institution to improve student learning results. (Leskes, A., 2002) |

| Assessment Plan | A document that outlines the

A plan for a specific assessment activity/project will include the following:

(adapted from the Northern Illinois University Assessment Glossary) |

| Assignment | The tasks assigned to students for the purpose of assessing student performance and generating artifacts. |

| Authentic Assessment | Determining the level of student knowledge/skill in a particular area by evaluating his/her ability to perform a "real world" task in the way professionals in the field would perform it. Authentic assessment asks for a demonstration of the behavior the learning is intended to produce. Example: asking students to create a marketing campaign and evaluating that campaign instead of asking students to answer test questions about characteristics of a good marketing campaign. |

| Benchmark | A point of reference for measurement; a standard of achievement against which to evaluate or judge performance. |

| Capstone Course/Experience | An upper-division class designed to help students demonstrate comprehensive learning in the major through some type of product or experience. In addition to emphasizing work related to the major, capstone experiences can require students to demonstrate how well they have mastered important learning objectives from the institution's general studies programs. (Palomba & Banta, 1999) |

| Closing the Loop | Using assessment results for improvement and/or evolution. |

| Competency | The demonstration of the ability to perform a specific task or achieve specified criteria. (James Madison University Dictionary of Student Outcomes Assessment) |

| Continuous Improvement | Consistently implementing changes based on assessment that lead to improvement by meeting chosen outcomes. |

| Course Assessment | Assessment to determine the extent to which a specific course is achieving its learning outcomes. |

| Criteria for Success | The minimum requirements for a program to declare itself successful. Example: 70% of students score 3 or higher on a lab skills assessment. |

| Criterion-referenced | Assessment where student performance is compared to a pre-established performance standard (and not to the performance of other students). (CRESST Glossary) See also: Norm-referenced. |

| Curriculum Map | A matrix showing the coverage of each program learning outcome in each course. |

| Direct Assessment | Collecting data/evidence on students' actual behaviors or products. Direct data-collection methods provide evidence in the form of student products or performances. Such evidence demonstrates the actual learning that has occurred in a specific content area or skill. (Middle States Commission on Higher Education, 2007). See also: Indirect Assessment. Examples: exams, coursework, essays, oral performances. |

| Embedded Assessment | Collecting data/evidence on program learning outcomes by extracting course assignments. It is a means of gathering information about student learning that is built into and a natural part of the teaching-learning process. The instructor evaluates the assignment for individual student grading purposes; the program evaluates the assignment for program assessment. When used for program assessment, typically someone other than the course instructor uses a rubric to evaluate the assignment. (Leskes, A., 2002) See also: Embedded Exams and Quizzes. |

| Embedded Exams and Quizzes | Collecting data/evidence on program learning outcomes by extracting a course exam or quiz. Typically, the instructor evaluates the exam/quiz for individual student grading purposes; the program evaluates the exam/quiz for program assessment. Often only a section of the exam or quiz is analyzed and used for program assessment purposes. See also: Embedded Assessment. |

| Evaluation | A value judgment. A statement about quality. |

| Focus Group | A qualitative data-collection method that relies on facilitated discussions, with 3-10 participants who are asked a series of carefully constructed open-ended questions about their attitudes, beliefs, and experiences. Focus groups are typically considered an indirect data-collection method. |

| Formative Assessment | Ongoing assessment that takes place during the learning process. It is intended to improve an individual student's performance, program performance, or overall institutional effectiveness. Formative assessment is used internally, primarily by those responsible for teaching a course or developing and running a program. (Middle States Commission on Higher Education, 2007) See also: Summative Assessment |

| Goals | General expectations for students. Effective goals are broadly stated, meaningful, achievable, and assessable. |

| Grading | The process of evaluating students, ranking them, and distributing each student's value across a scale. Typically, grading is done at the course level. |

| High Stakes Assessment | Any assessment whose results have important consequences for students, teachers, programs, etc. For example, using results of assessment to determine whether a student should receive certification, graduate, or move on to the next level. Most often the instrument is externally developed, based on set standards, carried out in a secure testing situation, and administered at a single point in time. (Leskes, A., 2002) Examples: exit exams required for graduation, the bar exam, nursing licensure. |

| Holistic Scoring | Scoring that emphasizes the importance of the whole and the interdependence of parts. Scorers give a single score based on an overall appraisal of a student's entire product or performance. Used in situations where the demonstration of learning is considered to be more than the sum of its parts and so the complete final product or performance is evaluated as a whole. (P. A. Gantt) See also: Analytic Scoring |

| Indicators | Specific criteria adopted by CGCC that expand the SLOs into measurable performance statements by which students demonstrate proficiency with any one Student Learning Outcome. The indicators serve as a structure for SLO measurement. |

| Indirect Assessment | Collecting evidence/data through reported perceptions about student mastery of learning outcomes. Indirect methods reveal characteristics associated with learning, but they only imply that learning has occurred. (Middle States Commission on Higher Education). See also: Direct Assessment. Examples: surveys, interviews, focus groups. |

| Learning outcomes | Statements that identify the knowledge, skills, or attitudes that students will be able to demonstrate, represent, or produce as a result of a given educational experience. There are three levels of learning outcomes: course, program, and institution. |

| Levels of Quality | The range of student performance quality described in the rubric. Each "cell" includes a descriptor of quality. The descriptors use consistent language to describe student performance, which is a requirement for a valid and reliable instrument. |

| Norm-referenced | Assessment where student performances are compared to a larger group. In large-scale testing, the larger group, or "norm group" is usually a national sample representing a wide and diverse cross-section of students. The purpose of a norm-referenced assessment is usually to sort or rank students and not to measure achievement against a pre-established standard. (CRESST Glossary) See also: Criterion-referenced. |

| Norming | Also called "rater training." The process of educating raters to evaluate student performance and produce dependable scores. Typically, this process uses criterion-referenced standards and analytic or holistic rubrics. Raters need to participate in norming sessions before scoring student performance. (Mount San Antonio College Assessment Glossary) |

| Objective | Clear, concise statements that describe how students can demonstrate their mastery of program goals. (Allen, M., 2008) Note: on the CTLA Assessment web site, "objective" and "outcome" are used interchangeably. |

| Outcomes | Clear, concise statements that describe how students can demonstrate their mastery of program goals. (Allen, M., 2008) Note: on the CTLA Assessment web site, "objective" and "outcome" are used interchangeably. |

| Performance Assessment | The process of using student activities or products, as opposed to tests or surveys, to evaluate students' knowledge, skills, and development. As part of this process, the performances generated by students are usually rated or scored by faculty or other qualified observers who also provide feedback to students. Performance assessment is described as "authentic" if it is based on examining genuine or real examples of students' work that closely reflects how professionals in the field go about the task. (Palomba & Banta, 1999) |

| Portfolio | A type of performance assessment in which students' work is systematically collected and carefully reviewed for evidence of learning. In addition to examples of their work, most portfolios include reflective statements prepared by students. Portfolios are assessed for evidence of student achievement with respect to established student learning outcomes and standards. (Palomba & Banta, 1999) |

| Program Assessment | An on-going process designed to monitor and improve student learning. Faculty: a) develop explicit statements of what students should learn (i.e., student learning outcomes); b) verify that the program is designed to foster this learning (alignment); c) collect data/evidence that indicate student attainment (assessment results); d) use these data to improve student learning (close the loop). (Allen, M., 2008) |

| Reliability | In the broadest sense, reliability speaks to the quality of the data collection and analysis. It may refer to the level of consistency with which observers/judges assign scores or categorize observations. In psychometrics and testing, it is a mathematical calculation of consistency, stability, and dependability for a set of measurements. |

| Rubrics | A tool, often shaped like a matrix with criteria on one side and levels of achievement across the top, used to score products or performances. Rubrics describe the characteristics of different levels of performance, often from exemplary to not evident. The criteria are ideally explicit, objective, and consistent with expectations for student performance. Rubrics are meaningful and useful when shared with students before their work is judged, so that students better understand the expectations for their performance. Rubrics are most effective when coupled with benchmark student work or anchors to illustrate how the rubric is applied. Rubrics are instruments that can be used to score student performance artifacts; these scores become the data for assessment. |

| Standard | In K-12 education and other fields, "standard" is synonymous with "outcome." |

| Student Learning Outcomes (SLO) | The general education outcomes adopted by CGCC are: Critical Thinking, Oral Communication, Information Literacy, and Personal Development. In general, they are statements of what students will be able to think, know, do, or feel because of a given educational experience. |

| Student Products | The specific artifacts, such as papers, performances, constructs, or other means, through which students display their levels of performance against the SLOs. |

| Summative Assessment | The gathering of information at the conclusion of a course, program, or undergraduate/graduate career to improve learning or to meet accountability demands. The purposes are to determine whether or not overall goals have been achieved and to provide information on performance for an individual student or statistics about a course or program for internal or external accountability purposes. Grades are the most common form of summative assessment. (Middle States Commission on Higher Education, 2007) See also: Formative Assessment |

| Transfer | A condition included in the design of courses and assignments in which students are required to apply knowledge and skills in a manner different from how they acquired that knowledge and those skills. The degree of difference in the transfer and application of knowledge and skills produces a range of rigor for students in creating their products. |

| Triangulation | The use of a combination of methods in a study. The collection of data from multiple sources to support a central finding or theme or to overcome the weaknesses associated with a single method. |

| Validity | Refers to whether the interpretation and intended use of assessment results are logical and supported by theory and evidence. In addition, it refers to whether the anticipated and unanticipated consequences of the interpretation and intended use of assessment results have been taken into consideration. (Standards for Educational and Psychological Testing, 1999) |

| Value-Added Assessment | Determining the impact or increase in learning that participating in higher education had on students during their programs of study. The focus can be on the individual student or a cohort of students. (Leskes, A., 2002). A value-added assessment plan is designed so it can reveal "value": at a minimum, students need to be assessed at the beginning and the ending of the course/program/degree. |
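The Criteria for Success and Reliability entries above describe calculations that are easy to make concrete. The Python sketch below is illustrative only: the scores are invented, the 1-4 rubric scale is an assumption, and `meets_criterion` and `percent_agreement` are hypothetical helper names, not part of Canvas or any CGCC tool. It checks the example criterion "70% of students score 3 or higher" and computes exact percent agreement between two raters, one simple measure of scoring consistency. Chance-corrected statistics such as Cohen's kappa are often preferred for formal reliability work and are not shown here.

```python
def meets_criterion(scores, cutoff=3, target=0.70):
    """Return (share, met): the share of scores at or above `cutoff`
    and whether that share reaches the `target` proportion."""
    if not scores:
        raise ValueError("no scores to evaluate")
    share = sum(1 for s in scores if s >= cutoff) / len(scores)
    return share, share >= target


def percent_agreement(rater_a, rater_b):
    """Fraction of artifacts to which two raters assigned the identical score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same, non-empty set of artifacts")
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return matches / len(rater_a)


# Hypothetical rubric scores (assumed 1-4 scale) for ten student artifacts,
# each scored independently by two raters. Not real CGCC data.
rater_a = [4, 3, 2, 3, 4, 1, 3, 3, 4, 3]
rater_b = [4, 3, 3, 3, 4, 1, 2, 2, 4, 3]

# Criterion check uses Rater A's scores for simplicity.
share, met = meets_criterion(rater_a)
print(f"{share:.0%} scored 3 or higher; criterion met: {met}")
print(f"Exact agreement between raters: {percent_agreement(rater_a, rater_b):.0%}")
```

With these invented scores, the script reports that 80% of artifacts scored 3 or higher (so the 70% criterion is met) and that the two raters agreed exactly on 70% of artifacts. Low agreement would suggest that raters need further norming before their scores are used as assessment data.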

Sources Consulted:

- Allen, M. (2008). Assessment Workshop at UH Manoa on May 13-14, 2008

- American Psychological Association, National Council on Measurement in Education, & the American Educational Research Association. (1999). Standards for educational and psychological testing. Washington DC: American Educational Research Association.

- CRESST Glossary. http://cresst.org/publications/cresst-publication-3137/

- Gantt, P.A. Portfolio Assessment: Implications for Human Resource Development. University of Tennessee.

- James Madison University Dictionary of Student Outcomes Assessment. https://www.jmu.edu/curriculum/self-help/glossary.shtml/

- Leskes, A. (Winter/Spring 2002). Beyond confusion: An assessment glossary. Peer Review, AAC&U.org

- Middle States Commission on Higher Education. (2007). Student learning assessment: Options and resources (2nd Ed.). Philadelphia: Middle States Commission on Higher Education.

- Mount San Antonio College Institution Level Outcomes. http://www.mtsac.edu/instruction/outcomes/ILOs_Defined.pdf

- Northern Illinois University Assessment Terms Glossary. http://www.niu.edu/assessment/resources/terms.shtml#A

- Palomba, C.A. & Banta, T.W. (1999). Assessment essentials: Planning, implementing, and improving assessment in higher education. San Francisco: Jossey-Bass.

- University of Hawai'i Manoa. (2017). Assessment Definitions/Glossary. Retrieved from manoa.hawaii.edu/assessment/resources/definitons

Using the National Institute for Learning Outcomes Assessment Transparency Framework, our college engages in meaningful practices that demonstrate effective teaching and student accomplishments.

General education student learning outcomes and indicators

Students acquire knowledge, attitudes, and skills through their coursework and in co-curricular activities. When students graduate with a degree or certificate, transfer to a university, or enter the workforce, they should have met the four Student Learning Outcomes presented below. The learning outcomes are intended to be student-centered and flexible enough to be measured using multiple forms of assessment across multiple fields of study and student experiences, both curricular and co-curricular.

In accordance with CGCC’s mission and vision for student learning and development, the expectation is that all academic disciplines will teach and assess the General Education Student Learning Outcomes in a manner appropriate to their field of study.

The assessment of student learning in all academic programs at Chandler-Gilbert Community College is a cornerstone measure of institutional effectiveness. The intent of the Student Learning Outcomes Assessment process is to evaluate the effectiveness of the General Education Program, and General Education courses, in developing the broad-based academic skills and values that exemplify a degree in higher education. Assessment planning and reporting are essential components of CGCC’s process for measuring student learning in all of its course modalities. This process aligns with Higher Learning Commission Criterion 4, against which CGCC is evaluated on a routine basis.

Student Learning Outcomes directly describe what a student is expected to learn as a result of participating in academic activities or experiences at the College. They focus on knowledge gained, skills and abilities acquired and demonstrated, and attitudes or values changed. Our Student Learning Outcome assessment approach is embedded in a larger practice of continuous improvement that values authentic and meaningful evidence in planning and implementing instructional changes with the ultimate goal of improving student learning.

In general, the Student Learning Outcomes Assessment process consists of three major components:

- Defining the most important goals for students to achieve as a result of participating in an academic experience (outcomes).

- Evaluating how well students are actually achieving those goals (assessment).

- Applying the results to improve the academic experience (closing the loop).

The faculty of CGCC have identified four General Education Student Learning Outcomes:

Critical thinking is the process of appropriately analyzing, evaluating, applying and synthesizing information. It is characterized by reflection, connection of new information to existing knowledge and reasoned judgment.

Indicators

- CT.1: Application
  - Students can apply knowledge and skills in a different context, situation, or task.

- CT.2: Analysis
  - Students can organize information into its component parts.
  - Students can identify relationships within information and its component parts.

- CT.3: Synthesis
  - Students can assemble parts or components to create a whole.
  - Students can create something original through the use of parts or components.

- CT.4: Evaluation
  - Students can assess the relative value of ideas and information.
  - Students can justify their conclusions.

Effective speech communication is the purposeful development, expression, and reception of a message through oral and nonverbal means.

Indicators

- OC.1: Message Delivery
  - Adapt the delivery of the message in context.
  - How thoroughly and completely have students adapted the delivery of the message in context?

- OC.2: Audience Analysis
  - Adapt the message for an audience.
  - How effectively have students adapted the message for an audience?

- OC.3: Message Purpose
  - Adapt the message to meet the purpose(s).
  - How effectively and consistently have students adapted the message to meet the purpose(s)?

- OC.4: Verbal Interaction
  - Adapt effective language in the conveyance of ideas and information.
  - How effectively and with what quality have students adapted language in the conveyance of ideas and information?

- OC.5: Non-Verbal Interaction
  - Utilize paralanguage (tone, rate, volume, inflection, etc.).
  - Utilize kinesics (facial expression, gestures, and overall movement).
  - How well can students apply paralanguage and kinesics?

- OC.6: Presentation Graphics Support
  - Apply graphics to support the presentation.
  - How effectively do students apply graphics to support the presentation?

Information literacy is the set of integrated abilities encompassing the reflective discovery of information, the understanding of how information is produced and valued, and the use of information in creating new knowledge and participating ethically in communities of learning.

Indicators

- Inquiry Process
  - IL.1.A: Adapt the delivery of the message in context.
  - IL.1.B: How thoroughly and completely have students adapted the delivery of the message in context?
  - IL.1.C: Formulate questions for research based on gaps or on reexamination of conflicting information.
  - IL.1.D: Determine an appropriate scope of investigation.
  - IL.1.E: Organize information in meaningful ways.
  - IL.1.F: Determine the initial scope of the task required to meet their information needs.
  - IL.1.G: Match information needs and search strategies to appropriate search tools.

- Contextual Authority
  - IL.2.A: Monitor gathered information and assess for gaps, weaknesses, or multiple perspectives.
  - IL.2.B: Analyze materials for audience, context, and purpose.
  - IL.2.C: Determine the credibility of sources based on author, content, format, assignment need, etc.

- Creation Process
  - IL.3.A: Contribute to scholarly conversation at an appropriate level.
  - IL.3.B: Synthesize ideas gathered from multiple sources.
  - IL.3.C: Draw reasonable conclusions founded on the analysis of information.
  - IL.3.D: Develop an understanding that their own choices impact the purpose for which the information product will be used and the message it conveys.

- Ethical Use
  - IL.4.A: Give credit to ideas of others through proper citation and attribution.

Personal development involves engaging in lifelong learning processes resulting in behaviors that promote student success and contribute to building a more equitable, empowered community and society in general through informed decision-making and responsible actions.

For proficiency, students will demonstrate the following as appropriate to discipline, course, or level of engagement:

Indicators

- PD.1: Recognizing learning as part of a process that includes continued development while identifying lessons learned and strategies for improvement.

- PD.2: Setting and monitoring short-term and long-term goals.

- PD.3: Recognizing the role of behaviors, emotions, attitudes, and relationships in personal, academic, and community success and well-being.

- PD.4: Engaging in reciprocal process(es) that lead to identifying and seeking deeper understandings of community needs and stakeholders.

- PD.5: Contributing to the building/shaping of a more equitable, empowered community and society by developing positive relationships based on mutual respect and understanding.

- PD.6: Collaborating with community stakeholders to identify/create plan(s) of action responding to identified needs based on informed decision-making.

- PD.7: Identifying actions taken to better oneself, community, and society based on informed decision-making.

- PD.8: Engaging in ethical and responsible actions that are mutually beneficial to all stakeholders.
